A closer look at the Model Context Protocol

I think the main reason the Model Context Protocol (MCP)1 has become popular is its simplicity. Like any remote procedure call protocol, it allows us to call a function outside our own process. That function can run in a different process on the same machine or a different machine entirely. As such the remote function can be implemented in any language as long as the client and server use the same RPC protocol. Over the last decades, we have seen many approaches to RPC: Windows Component Object Model (COM), JavaRMI, and more recently gRpc, REST, or JSON-RPC.

MCP is building on top of JSON-RPC and is just a slightly LLM-flavored extension to the protocol. In the following walkthrough of a complete MCP exchange, I will highlight additions made to the original JSON-RPC specification.

Initializing the connection.

To start the MCP protocol, the client sends a request to the server with an initialization request. This request tells the server which MCP protocol version the client would like to use and what capabilities the client provides as well as general information about the client.

{

"jsonrpc": "2.0",

"id": 1,

"method": "initialize",

"params": {

"protocolVersion": "2024-11-05",

"capabilities": {

"sampling":{}

},

}

}

The server acknowledges the request and responds by saying: “Okay, cool, I can offer you tools.”. For some reason, they thought it necessary to rename functions to tools. So really it is saying “I can in principle execute some functions for you.”. Besides tools supported capabilities include logging, completion, prompts, or resources(accessing files). By omitting them we let the client know that this particular server only supports tool calling.

{...

"capabilities": {

"logging": {},

"tools": {

"listChanged": false

}

},

}

Besides the MCP-specific versioning and information exchange, what sets this apart from JSON-RPC is the listChanged parameter. If this is set to true, the server is capable of notifying the client if the list of provided tools changes. For the server to be able to talk to the client, the client first needs to establish an SSE stream. The client can choose to do so by calling a GET Endpoint on the server. If the server supports the resource capability, this provides an easy way for the client to keep up with file changes. If you want to build your own AI coding assistant this will be probably quite useful. Another interesting use case that the server-side events enable is sampling. Within MCP Sampling refers to requesting an LLM for completion or generation. This provides a standardized way for a mcp-server to request a LLM sampling through the client. The client is then responsible for forwarding a request from the mcp-server to an LLM. In some way this almost reverses the server-client relationship. But it allows the tool provider to use LLMs without hosting them. This means tools can stay in untouched when new LLM models get deployed through the client.

Allow the client to look up functions

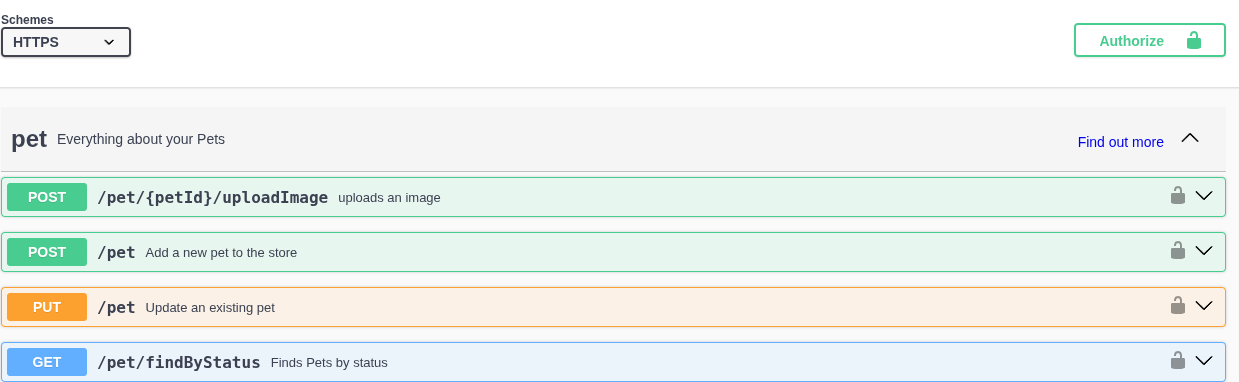

Most RPC protocols have a standardized way of publishing which methods a server provides. For example, in COM or gRPC this publication of endpoints is part of the protocol specification with Interface Definition Language (IDL) and Protocol Buffers respectively. Other protocols, mostly those from the web domain, are not formed by careful consideration but rather by the minimal common denominator. As such, both REST interfaces and JSON-RPC do not have a built-in approach to publishing endpoint definitions. If you want to use these protocols directly, you are assuming that the client has prior knowledge of things like the server URLs, method names, and parameters. This is of course not manageable beyond very small projects. As such, most of these have received de facto standards for exchanging endpoint definitions. For REST APIs, the OpenAPI specification has become the default, and you have probably seen its visual representation, Swagger UI.

With the Swagger UI, the human was the intended consumer of the API. However, with MCP the intent consumer is a language model. In a way, large language models could act as an implicit facade3 to different APIs. Instead of abstracting the functionality we need into a separate internal interface, we can simply put a language model in between our application and external APIs. We have to make sure to describe the internal functionality we need in plain English to a language model. Then the language model will then (hopefully) pick out the right function to achieve each task. If the API changes, or we switch to a different provider with a new interface, the language model may still succeed in selecting the correct endpoints, provided our descriptions are clear and detailed enough. For now, we probably want to use it on a smaller scale to add some fuzzy logic to our code.

For JSON-RPC there is an OpenRPC Standard to publish endpoints. MCP introduces its own lookup instead. Each capability has a list method that lists the content of that capability. We can look up the tools that a server provides by calling the following after we initialize a session.

{...

"method": "tools/list",

"params": {

"cursor": "optional-cursor-value"

}

}

And the server will answer: “I have the following functions: 1. A function that can provide you information about the current user”:

{...

"result": {

"tools": [

{

"name": "get_user_information",

"title": "Get Information about the current user.",

"description": "Get at least the name, age, address of the current user.",

"inputSchema": {

"type": "object",

"properties": {

},

"required": []

}

}

]}

This allows the mcp-server to specify the parameters and return types of each function that they offer. Similarly, we can list files if the server supports the resource capability. If we get a list changes notification, it is on the client to request this list again to see what actually changed. Other capabilities support this listing in a similar fashion.

Calling a tool (function)

The client now knows the description and the name of each tool. It can use this information to request the MCP server to execute a function.

{

"jsonrpc": "2.0",

"id": 2,

"method": "tools/call",

"params": {

"name": "get_user_information",

"arguments": {}

}

}

And the server will simply respond with whatever information it has about the user.

{...

"result": {

"content": [

{

"type": "text",

"text": "Name: Jan Scheffczyk

\nAge: 30, born 23.09.1994

\nAddress: Germany

12345 SampleCity

RandomStreet 2"

}],

"structuredContent": {

"name": "Jan Scheffczyk",

"age": "30",

"birthdate": "1994-09-23",

"address": "Germany 12345 SampleCity RandomStreet 2"

},

"isError": false

}

}

In practice, the decision of which function to call is often delegated to the language model itself. To enable this, the client must supply the available function descriptions and definitions in the tool-calling or function-calling format that the model expects. The LLM will then generate tokens until it produces a special token that signals a function call, followed by the name of the method it intends to invoke and the corresponding parameters. The client can then parse these tokens to execute the code above.

Implementation

As a proof of concept I implemented a MCP server in Go without any SDK. All it took was a day or two of fiddling around. I now have something that I can now simply plug into existing systems and it actually works. And with an SDK this would probably be done an hour or so.

The MCP inspector was very useful for testing:

DANGEROUSLY_OMIT_AUTH=true npx @modelcontextprotocol/inspector

Functions vs Agents

We have seen that at its core MCP provides a protocol that allows us(the client) to ask a mcp-server what functions it has and what they look like. Then we can ask the server to execute the function for us and give us the result. Therefore, MCP is an interface mostly used to integrate the fuzzy logic enabled by LLMs with traditional, deterministic programming.

We can use LLMs to make decisions that would be difficult to implement with conventional code. For example, consider the logic: “Based on the user’s last sentence, display a new data visualization if they would benefit from seeing it.” Determining user “benefit” is hard to put into code. By treating LLMs as functions, we can outsource the fuzzy, decision-making parts of the program to LLMs while keeping the logic for the parts that have to be correct. For example, we do not want our bank transfers to be handled directly by LLMs no matter how good and cheap they get. We have normal code whose calculations can be verified through formal proof, making it the most reliable and effective tool for these jobs. LLMs are inherently stochastic4 and as such there will always be a chance for failure. As such anything that involves transactions will never migrate to LLM. Same for strict business processes that have to be followed exactly. We will always use conventional code when we need exact outcomes. They do however enable contextual decision-making that was difficult to impossible before.

We can design such LLM-enabled functions to solve a specific task. We determine the inputs necessary to solve the task and provide the LLM with a task description (the prompt) and the inputs. The combination of code and LLMs creates a directed call graph. Running the program will take a specific path through that graph. This is essentially the same as a stack trace. The stack trace is the path of a specific execution took from the entry node main() to the current state. The only difference is that some nodes use LLMs to decide how to continue rather than normal code. This means we can debug and reason through the problem in most of the same ways we do for code.

Unlike functions, agents allow us to define the task even more vaguely. Instead of giving it all the necessary inputs right away, we give agents the ability to ask clarifying questions to their caller. Agent A might delegate a task to Agent B, only for Agent B to ask a clarifying question back to Agent A. This introduces loops to our call graph making it more difficult to understand, reason through, and debug the program. This also means that the callers of the agent are capable of providing feedback. They need to be aware of what the result should look like. Most professionals will have experienced that customers might not have an exact idea of the result they want. It is a more general idea of a solution. However, agents assume that the human or agent caller knows exactly what they want. If this feedback turns out to not accurate it muddies our entire execution path. So once we introduce agents we are dealing with vague tasks and the call graph full of (potential) loops.

My gut feeling is that it will be very difficult to develop a robust application with agents. When something inevitably goes wrong there are too many paths to keep track of. We have to sift through tons of generated conversations between agents to then guess how we might improve a prompt to fix the issue. There will be a suite of tools that promise to make complexity manageable. To me, this is similar to the microservice approach. If microservices did not work for your team or project proponents will point out a tool or automation that you did not set up sufficiently. They argue that the issue is not with the complexity introduced by the microservice architecture but with you. I expect that there will be (maybe there already is?) an ecosystem of tools to monitor and guardrail multi-agent systems. Most application problems can be sufficiently described to use traditional code and LLM as functions. So if given the opportunity I would avoid multi-agent systems just like I avoid micro-services until I have a painful need that would be solved by either.

3. Facade pattern ↩

4. We can turn off stochastic sampling by setting a temperature of zero, but we cannot change that it has been trained on a large dataset and that we might encounter samples that fall off the training dataset and that didn’t generalize correctly. In essence, unless we can test the entire input domain to be correct (in which case, why do we even need a large language model?), we cannot be sure that there are no spaces in the input space that yield wildly unrealistic results because they are out-of-training-distribution. ↩