Remind yourself that ChatGPT is a slow and insidious killer

It feels like the tide is shifting. Influencers like ThePrimeagen have gone from being critical of LLM code assistants to dismissing them outright. On r/programming alone, I came across three posts condemning vibe coding in the last week. Criticism of LLM integrations in IDEs seems well established, and I imagine most of us can’t use tools like Cursor or GitHub Copilot at work anyway. So instead, I want to talk about how ChatGPT, Claude & Co. change the way we search for answers.

LLMs are now the first choice

Instead of googling an error message, you paste it into ChatGPT. Instead of reading through a package’s documentation, you ask Gemini how to use it. Instead of finding an example implementation, you ask Claude to generate one for you.

Is that a bad thing? Some argue that LLMs are not competent enough: they make too many mistakes. However, in my experience, LLM-generated answers are useful for 80–90% of my everyday coding problems. They often help me solve issues quickly so I can move on¹. They have become so useful that they are now my first choice when I run into a problem. Before I try anything else, I copy the error and paste it into a chat box. The error is translated into an easily understandable explanation, and often it even comes with a solution.

For an isolated problem, this is a perfect solution. But as I have grown more reliant on LLMs, I have started noticing a few patterns.

LLM answers are too specific.

When going through documentation or man pages, it takes a bit of time to find the parameter you were looking for. It might not feel like it, but this extra time is not wasted. You unintentionally explore parts of the documentation adjacent to your problem. You become aware of other parameters and functions. And perhaps because it is more tedious, I actually retain some of that newfound knowledge.

LLM answers are too good.

The answers are already tailored to your specific context. Before, I would have to read through a Stack Overflow answer, understand how it works, and map it to my own problem. So there was at least a bit of cognitive processing involved. I believe that this processing, and spending time on the problem, is essential to retaining knowledge. The answers from ChatGPT work, but I simply skim them for the solution. I extract the solution and promptly forget about it.

LLM answers are too easy.

Getting answers very quickly sets a new anchor in your mind: it establishes how long a certain task should take. The other day I had Claude generate a small neural network architecture, a training loop, and data loading for me. In total, maybe 700 lines of code. This took maybe 10–15 minutes and two iterations to get it to “work”. Of course, as generated code goes, it doesn’t quite do what it was supposed to … and I don’t understand how it works. So I scrapped the whole thing and started again from a blank project, and I noticed that I got impatient after 1–2 hours. I felt like I should be faster, like I was wasting time. I know it is ridiculous, but I cannot help comparing my manual efforts to the blistering speed of Claude.

Interestingly, studies indicate that individuals under time pressure favor simpler heuristics and shortcuts in order to complete tasks. In other words, my self-imposed time pressure makes me even more prone to return to ChatGPT for help. It degrades my ability to just sit with a problem until it is solved. Before ChatGPT, I felt a rush of joy and pride when I solved a problem after sitting with it for a long time. Now I feel ashamed for taking so much longer than my brain anticipated. Considering that humans already favor comfort and convenience, this is quite the vicious circle. For me, this might be the main argument for reducing any form of LLM use and code generation.

Beyond programming

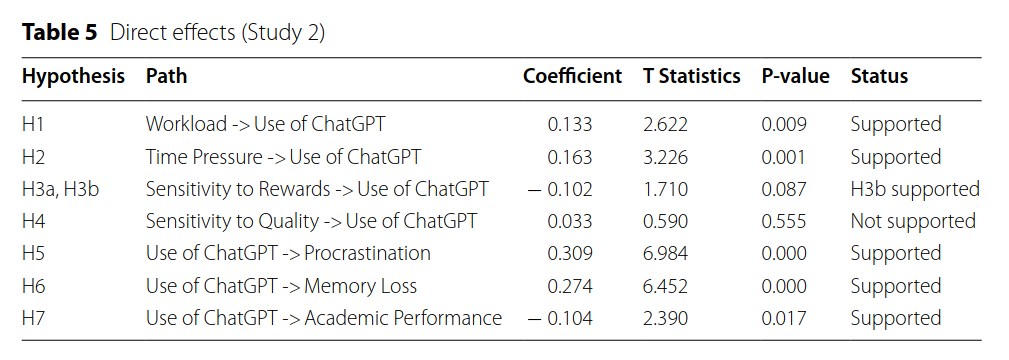

I went out of my way to find a study that confirms my own bias. The researchers surveyed around 500 university students about how much they use ChatGPT. They then compared the students’ average grades and found a negative correlation between self-reported ChatGPT use and academic performance. In other words, more frequent ChatGPT use was associated with worse grades. Further, students who reported using ChatGPT were also more likely to report problems with memory retention.

They also examined additional hypotheses, such as whether time pressure or high workload increases the likelihood of using ChatGPT, or if its use is correlated with procrastination. These findings might be less surprising, but I found the descriptions of the individual hypotheses quite informative. Below is a list of the hypotheses that have been tested:

Conclusion

I am not suggesting we ditch LLM tools entirely, but we should be aware of the trade-offs. There is always a balance between productivity and learning. If you were to implement everything from scratch in C with no libraries, you would probably learn a lot, but for most tasks it would take too much time to get anything useful done. However, if you really want to understand how something works, the best way is to build it yourself.

The crux of the matter is that while LLMs save us time, learning itself requires time and struggle. The better LLMs get, the less time we spend and the less we struggle. You can either go fast, or you can go far.

1. Your mileage may vary, but for the sake of argument, let’s just assume that LLMs can help in most cases.